Evaluation Agent

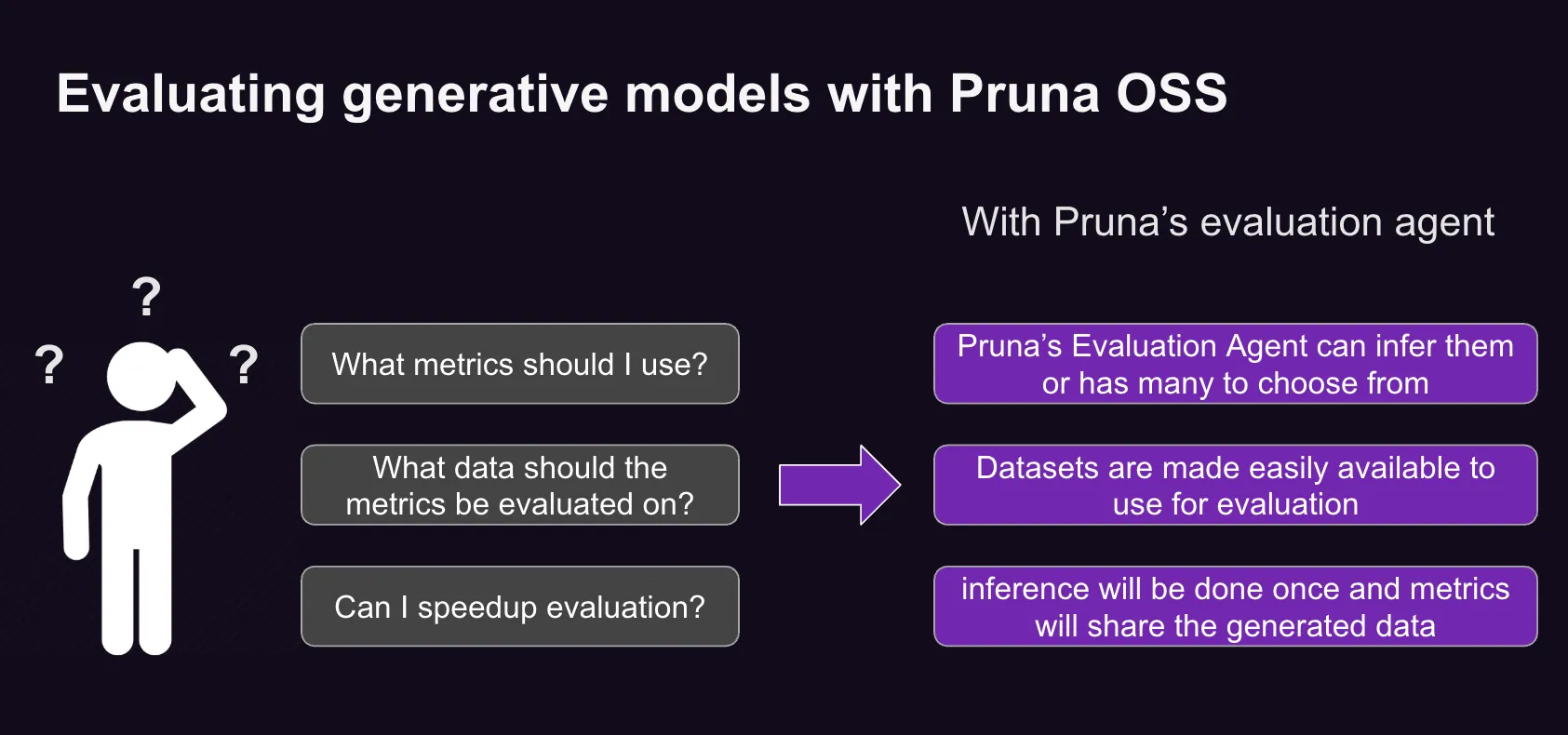

The Evaluation Agent provides two key capabilities:

Recommends and applies the proper evaluation metrics.

Checks whether your optimized (“smashed”) model is compatible with your inference pipeline.

Don't Know What Metric to Use?

No worries: describe your evaluation goal as a string request (e.g. "image_generation_quality"), then provide:

Your dataset

The target hardware (e.g. "cpu")

The Evaluation Agent will automatically select the appropriate metric and run the evaluation for you.

task = Task(request="image_generation_quality", datamodule=PrunaDataModule.from_string('LAION256'), device="cpu")
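To make the idea of a string request concrete, here is a minimal, library-free sketch of how a request could be resolved to metrics and then run over a dataset. It is an illustration only, not how the Evaluation Agent is implemented: the request names, the toy metrics, and the helpers `resolve_metrics` and `evaluate` are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence

# Toy metrics, for illustration only. Real image-quality metrics are far more involved.
def mean_absolute_error(preds: Sequence[float], targets: Sequence[float]) -> float:
    return sum(abs(p - t) for p, t in zip(preds, targets)) / len(targets)

def exact_match_rate(preds: Sequence[float], targets: Sequence[float]) -> float:
    return sum(p == t for p, t in zip(preds, targets)) / len(targets)

# Hypothetical registries (assumptions, not the library's actual tables).
METRICS: Dict[str, Callable[[Sequence[float], Sequence[float]], float]] = {
    "mean_absolute_error": mean_absolute_error,
    "exact_match_rate": exact_match_rate,
}
REQUEST_TO_METRICS: Dict[str, List[str]] = {
    "toy_regression_quality": ["mean_absolute_error"],
    "toy_label_quality": ["exact_match_rate"],
}

def resolve_metrics(request: str) -> List[str]:
    """Map a plain-language request string to the metric names it stands for."""
    if request not in REQUEST_TO_METRICS:
        raise ValueError(f"Unknown evaluation request: {request!r}")
    return list(REQUEST_TO_METRICS[request])

def evaluate(request: str, model: Callable[[float], float],
             inputs: Sequence[float], targets: Sequence[float]) -> Dict[str, float]:
    """Run the model on every input and score it with each resolved metric."""
    preds = [model(x) for x in inputs]
    return {name: METRICS[name](preds, targets) for name in resolve_metrics(request)}

# Usage: the caller names a goal, never a metric.
results = evaluate("toy_label_quality", lambda x: x * 2, [1, 2, 3], [2, 4, 7])
print(results)
```

The point of the design is that the caller only states a goal; the mapping from goal to metrics lives in one place and can change without touching user code.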

Who's It For?

This feature is ideal for teams that:

Aren’t sure how to evaluate model quality

Aren’t yet evaluating models but want to start doing it right

It’s designed to simplify model validation even for non-experts.

For more information, please read the documentation.