Evaluation Orchestration

Evaluation Orchestration offers configurable modes and workflows for evaluating model performance after optimization.

Evaluation Modes

Single-Model mode: Evaluates one model at a time. Each run produces standalone quality scores (e.g., accuracy, latency, memory).

Pairwise mode: Compares an optimized model against a baseline. The agent uses the first evaluated model as the reference and outputs a relative comparison score.
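The difference between the two modes can be illustrated with a minimal Python sketch. It is not the Evaluation Agent's API: the `Scores` class, the `relative_change` function, and the sample values are all hypothetical, and the sketch assumes that the "relative comparison score" is the fractional change of each metric against the baseline, which this page does not define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scores:
    """Standalone quality scores from one single-model run (hypothetical schema)."""
    accuracy: float    # fraction of correct predictions, higher is better
    latency_ms: float  # lower is better
    memory_mb: float   # lower is better


def relative_change(baseline: Scores, optimized: Scores) -> dict[str, float]:
    """Pairwise comparison: fractional change of each metric versus the baseline.

    Assumption: the score is (optimized - baseline) / baseline per metric.
    Baseline values must be non-zero.
    """
    return {
        name: (getattr(optimized, name) - getattr(baseline, name)) / getattr(baseline, name)
        for name in ("accuracy", "latency_ms", "memory_mb")
    }


# Single-model mode: each run yields its own standalone scores.
# The first evaluated model serves as the reference (baseline).
baseline = Scores(accuracy=0.80, latency_ms=100.0, memory_mb=2000.0)
optimized = Scores(accuracy=0.76, latency_ms=50.0, memory_mb=1000.0)

# Pairwise mode: the optimized model is scored relative to the baseline.
# Here latency_ms and memory_mb both come out as -0.5 (halved).
comparison = relative_change(baseline, optimized)
```

A negative value means the metric decreased relative to the baseline, which is an improvement for latency and memory but a regression for accuracy.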

Evaluation Workflows

You can trigger an evaluation using one of two methods:

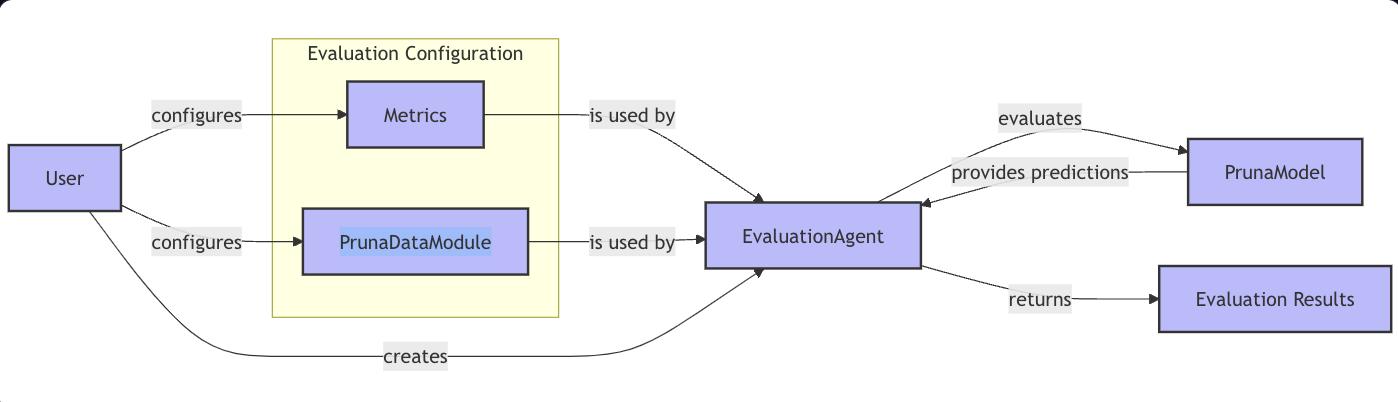

Direct Parameters Workflow

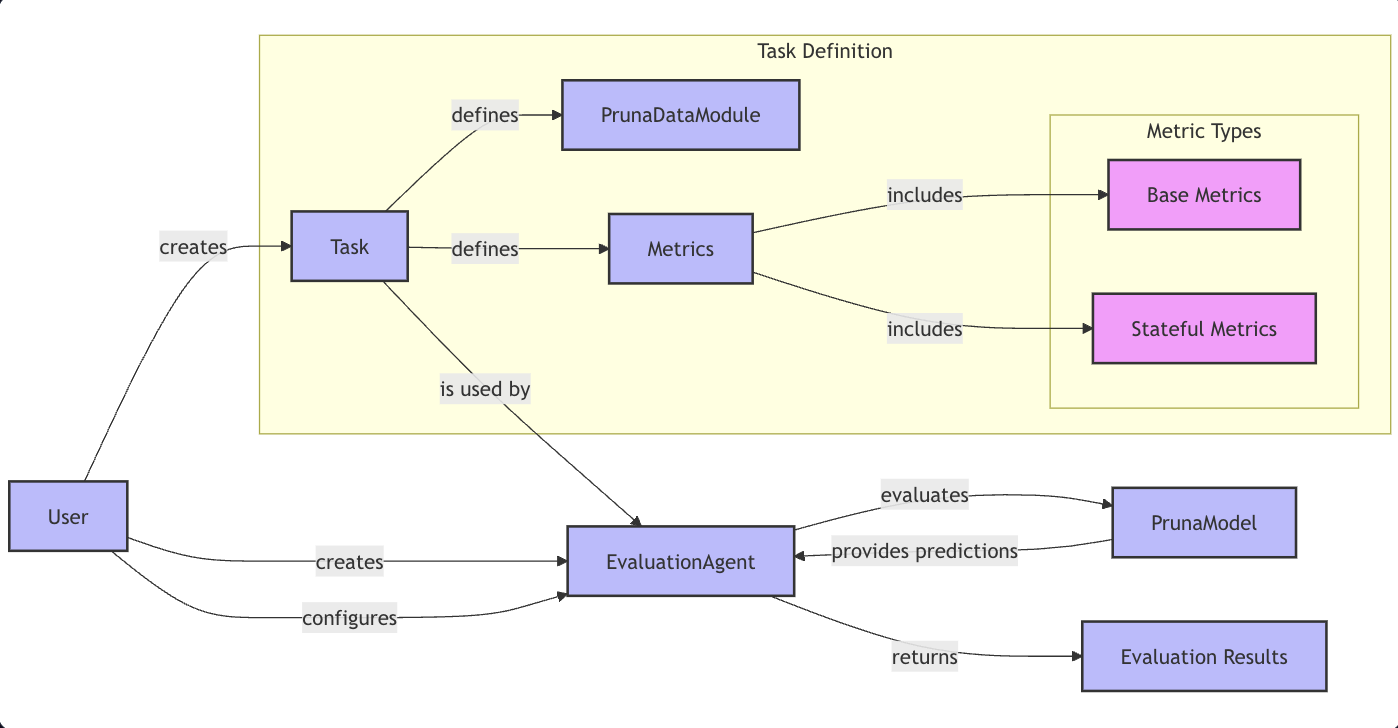

Pass the model path, dataset path, metric, and task directly to the Evaluation Agent. This is the fastest way to get started.

Task-Based Workflow

Define a named evaluation task with a consistent setup. This is ideal for reusability, team collaboration, or recurring benchmarks.
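The two workflows can be contrasted with a short Python sketch. Everything here is an assumption made for illustration: `run_evaluation`, `EvalTask`, `register_task`, `run_task`, the task name, and the file paths are hypothetical and do not reflect the Evaluation Agent's actual interface.

```python
from dataclasses import dataclass


def run_evaluation(model_path: str, dataset_path: str, metric: str, task: str) -> dict:
    """Hypothetical stand-in for the Evaluation Agent entry point.

    It only echoes the request; a real agent would load the model and score it.
    """
    return {"model": model_path, "dataset": dataset_path, "metric": metric, "task": task}


@dataclass(frozen=True)
class EvalTask:
    """A named, reusable evaluation setup (hypothetical schema)."""
    name: str
    dataset_path: str
    metric: str
    task: str


# Registry of named tasks, shared across a team or a recurring benchmark.
TASKS: dict[str, EvalTask] = {}


def register_task(task: EvalTask) -> None:
    """Store a task definition under its name."""
    TASKS[task.name] = task


def run_task(name: str, model_path: str) -> dict:
    """Task-based workflow: look up the setup by name, then evaluate the model."""
    t = TASKS[name]
    return run_evaluation(model_path, t.dataset_path, t.metric, t.task)


# Direct parameters workflow: everything is passed in the call.
direct = run_evaluation("models/opt.bin", "data/val.jsonl", "accuracy", "classification")

# Task-based workflow: define the setup once, then reuse it for any model.
register_task(EvalTask("nightly-cls", "data/val.jsonl", "accuracy", "classification"))
via_task = run_task("nightly-cls", "models/opt.bin")
```

In this sketch both calls issue the same evaluation request. The task-based form keeps the dataset, metric, and task type in one place, so recurring runs only need to supply the model.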

For more information on Evaluation Orchestration, see the documentation.