Compatibility Layer

The Compatibility Layer is Pruna’s bridge to the broader AI ecosystem: it ensures that models you optimize with Pruna run smoothly on popular deployment and inference platforms. Whether you run in Docker, deploy with TritonServer, build workflows in ComfyUI, or serve with vLLM, Pruna integrates into your existing setup.

1. Pruna and Docker

Pruna can be deployed in Docker containers, which makes it easy to set up reproducible, GPU-accelerated environments. With a simple Dockerfile, you can install Pruna (and, optionally, Pruna Pro), build an image, and launch an interactive container that is ready for model optimization and inference, without resolving dependencies by hand.
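As a rough sketch of that workflow, the Python snippet below renders a minimal Dockerfile and the matching `docker build` / `docker run` commands. The base image, the `pruna-env` tag, and the helper names are placeholders chosen for illustration, not taken from Pruna's guide. Installing Pruna Pro may require extra steps that are only covered in the official guide, so it is left to the `extra_packages` parameter.

```python
import shlex

# Placeholder values for illustration only (assumptions, not from the Pruna guide).
BASE_IMAGE = "pytorch/pytorch:latest"  # assumption: any CUDA-enabled PyTorch image
IMAGE_TAG = "pruna-env"                # hypothetical image name


def render_dockerfile(base_image: str = BASE_IMAGE,
                      extra_packages: tuple[str, ...] = ()) -> str:
    """Return a minimal Dockerfile that installs Pruna from PyPI."""
    packages = " ".join(("pruna",) + tuple(extra_packages))
    lines = [
        f"FROM {base_image}",
        "WORKDIR /workspace",
        f"RUN pip install --no-cache-dir {packages}",
        'CMD ["/bin/bash"]',  # drop into a shell for interactive use
    ]
    return "\n".join(lines) + "\n"


def docker_commands(tag: str = IMAGE_TAG) -> list[list[str]]:
    """Return argv lists for building the image and starting an interactive GPU container."""
    build = ["docker", "build", "-t", tag, "."]
    # --gpus all requires the NVIDIA Container Toolkit on the host.
    run = ["docker", "run", "--gpus", "all", "-it", "--rm", tag]
    return [build, run]


if __name__ == "__main__":
    print(render_dockerfile())
    for argv in docker_commands():
        print(shlex.join(argv))
```

Save the printed Dockerfile next to your project, then run the two printed commands from that directory.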

Read the Docker integration guide in the documentation.

2. Pruna and ComfyUI

Pruna plugs directly into ComfyUI, letting you optimize Diffusers models for faster inference inside the ComfyUI interface. It adds four specialized nodes: a compilation node for model speed-ups and three caching nodes (adaptive, periodic, and auto), each using a different strategy to accelerate inference by reusing computations. You can tune each node's settings to balance speed and output quality for your workflow.
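The idea of "reusing computations" can be illustrated with a toy iterative loop. The sketch below is a generic illustration of two caching strategies applied to a stand-in "expensive block": periodic (recompute every `interval` steps) and adaptive (recompute only when the input has drifted more than `threshold` since the last recompute). It is not Pruna's node implementation. The class names, parameters, and update rule are hypothetical, and the auto node is not modeled.

```python
from typing import Callable, Optional

Block = Callable[[float], float]


class PeriodicCache:
    """Recompute the expensive block every `interval` steps; reuse the last output in between."""

    def __init__(self, interval: int) -> None:
        if interval < 1:
            raise ValueError("interval must be >= 1")
        self.interval = interval
        self.cached: Optional[float] = None

    def __call__(self, step: int, x: float, block: Block) -> float:
        if self.cached is None or step % self.interval == 0:
            self.cached = block(x)  # full computation
        return self.cached          # otherwise the cached result is reused


class AdaptiveCache:
    """Recompute only when the input drifted more than `threshold` (relative) since the last recompute."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.ref_input: Optional[float] = None
        self.cached: Optional[float] = None

    def __call__(self, step: int, x: float, block: Block) -> float:
        if self.ref_input is not None and self.cached is not None:
            drift = abs(x - self.ref_input) / max(abs(self.ref_input), 1e-8)
            if drift <= self.threshold:
                return self.cached  # input barely changed: reuse
        self.ref_input = x
        self.cached = block(x)
        return self.cached


def toy_sampling_loop(cache, num_steps: int = 10) -> tuple[float, int]:
    """Hypothetical loop; returns the final state and how many times the block actually ran."""
    calls = 0

    def expensive_block(v: float) -> float:
        nonlocal calls
        calls += 1
        return 0.9 * v  # stand-in for a costly network layer

    x = 1.0
    for step in range(num_steps):
        out = cache(step, x, expensive_block)
        x = 0.5 * x + 0.5 * out  # stand-in for a sampler update
    return x, calls


if __name__ == "__main__":
    for name, cache in [("periodic", PeriodicCache(3)), ("adaptive", AdaptiveCache(0.1))]:
        final, calls = toy_sampling_loop(cache)
        print(f"{name}: {calls} block calls, final state {final:.4f}")
```

A larger `interval` or `threshold` skips more computation at the cost of a less accurate result, which is the speed/quality trade-off the node settings expose.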

See the ComfyUI integration details in the documentation.

3. Pruna and TritonServer

Pruna-optimized models can be deployed with NVIDIA Triton Inference Server for scalable, production-grade inference. The workflow has four steps: optimize your model with Pruna, build a Docker image that combines Triton and Pruna, configure your Triton model repository, and serve the model with Triton.
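Triton loads models from a model repository laid out as `<repository>/<model_name>/config.pbtxt` plus numbered version directories. A minimal sketch, assuming Triton's Python backend is used to wrap the Pruna-optimized model: the snippet writes that layout with a placeholder `model.py`. The model name `pruna_model`, the `prompt`/`image` tensor names, and their shapes are hypothetical; the official Pruna guide may use a different backend or configuration.

```python
import tempfile
import textwrap
from pathlib import Path

MODEL_NAME = "pruna_model"  # hypothetical model name

# Placeholder Python-backend entry point; loading and inference are left to you.
MODEL_PY_STUB = '''\
import triton_python_backend_utils as pb_utils


class TritonPythonModel:
    def initialize(self, args):
        # Load the Pruna-optimized model here (not shown).
        self.model = None

    def execute(self, requests):
        # Run inference per request and return pb_utils.InferenceResponse objects.
        raise NotImplementedError("fill in inference for your model")

    def finalize(self):
        self.model = None
'''


def render_config(model_name: str) -> str:
    """Return a minimal config.pbtxt for a Python-backend model (tensor specs are hypothetical)."""
    return textwrap.dedent(f"""\
        name: "{model_name}"
        backend: "python"
        max_batch_size: 0
        input [
          {{
            name: "prompt"
            data_type: TYPE_STRING
            dims: [ 1 ]
          }}
        ]
        output [
          {{
            name: "image"
            data_type: TYPE_UINT8
            dims: [ -1, -1, 3 ]
          }}
        ]
        instance_group [ {{ kind: KIND_GPU }} ]
        """)


def write_model_repository(root: Path, model_name: str = MODEL_NAME, version: int = 1) -> Path:
    """Create <root>/<model_name>/config.pbtxt and <root>/<model_name>/<version>/model.py."""
    model_dir = root / model_name
    version_dir = model_dir / str(version)
    version_dir.mkdir(parents=True, exist_ok=True)
    (model_dir / "config.pbtxt").write_text(render_config(model_name))
    (version_dir / "model.py").write_text(MODEL_PY_STUB)
    return model_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model_dir = write_model_repository(Path(tmp))
        for path in sorted(model_dir.rglob("*")):
            print(path.relative_to(tmp))
```

Once the repository is in place, point the server at it, for example with `tritonserver --model-repository=/models` inside the Triton+Pruna container.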

Check out the TritonServer deployment documentation.

4. Pruna and vLLM

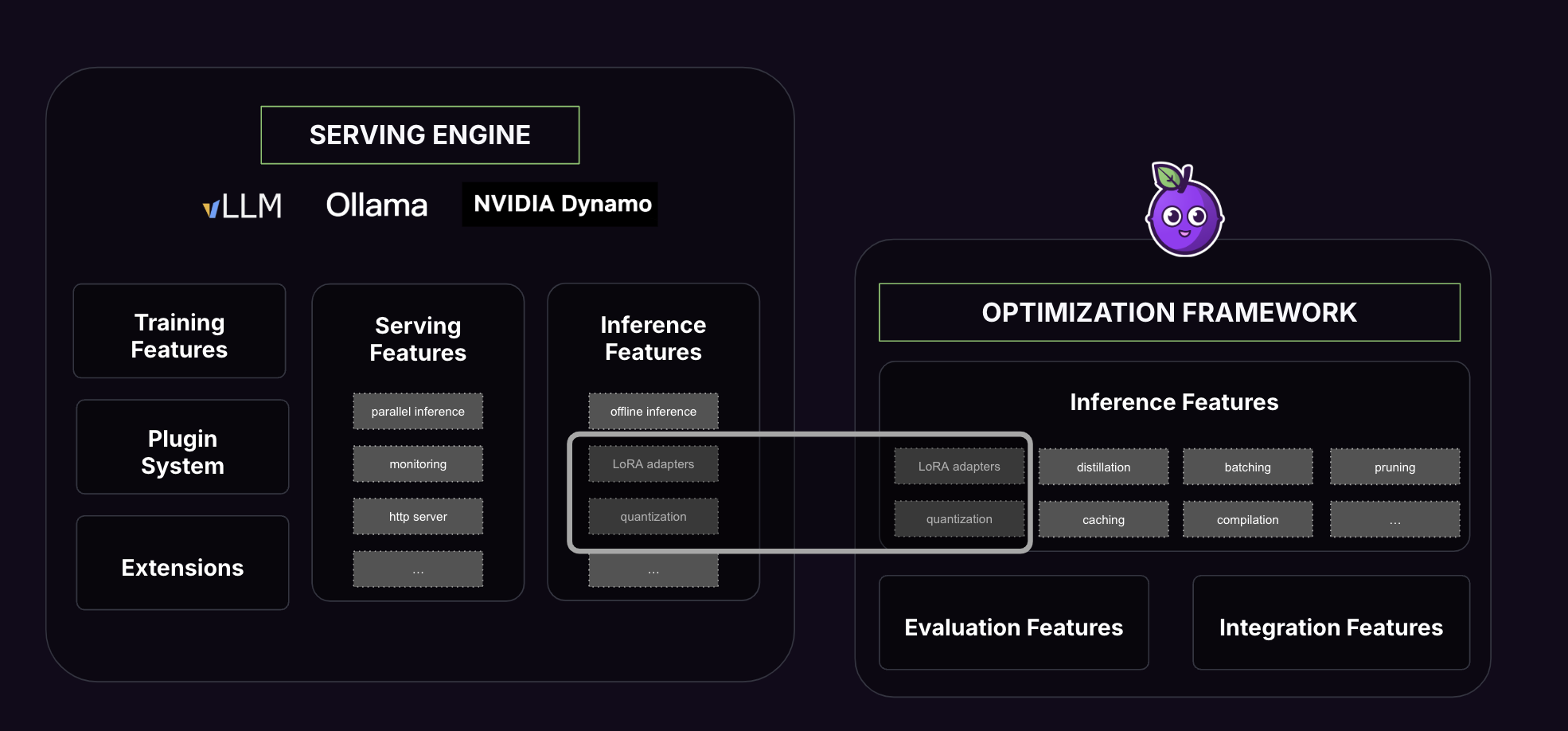

While vLLM focuses on optimizing the serving environment (distributed inference, throughput, infrastructure-level tuning), Pruna specializes in model-level optimizations. You can use Pruna to prepare models with quantization methods that vLLM supports, but Pruna’s main advantage lies in combining multiple optimization techniques, which goes beyond what a serving platform alone offers. For maximum flexibility and performance, use Pruna as the optimization layer in your stack, alongside an adaptable serving engine that runs the optimized models.