Evaluation Toolkit

The Evaluation Toolkit is your all-in-one suite for evaluating and optimizing AI models, streamlining the process from metric selection to performance analysis. It brings together four core features:

1. Evaluation Metrics

Get a clear view of how model compression impacts both quality and efficiency. The toolkit covers:

Efficiency Metrics: Measure speed (total time, latency, throughput), memory (disk, inference, training), and energy usage (consumption, CO2 emissions).

Quality Metrics: Assess fidelity (FID, CMMD), alignment (Clip Score), diversity (PSNR, SSIM), accuracy (accuracy, precision, perplexity), and more. Custom metrics are supported.

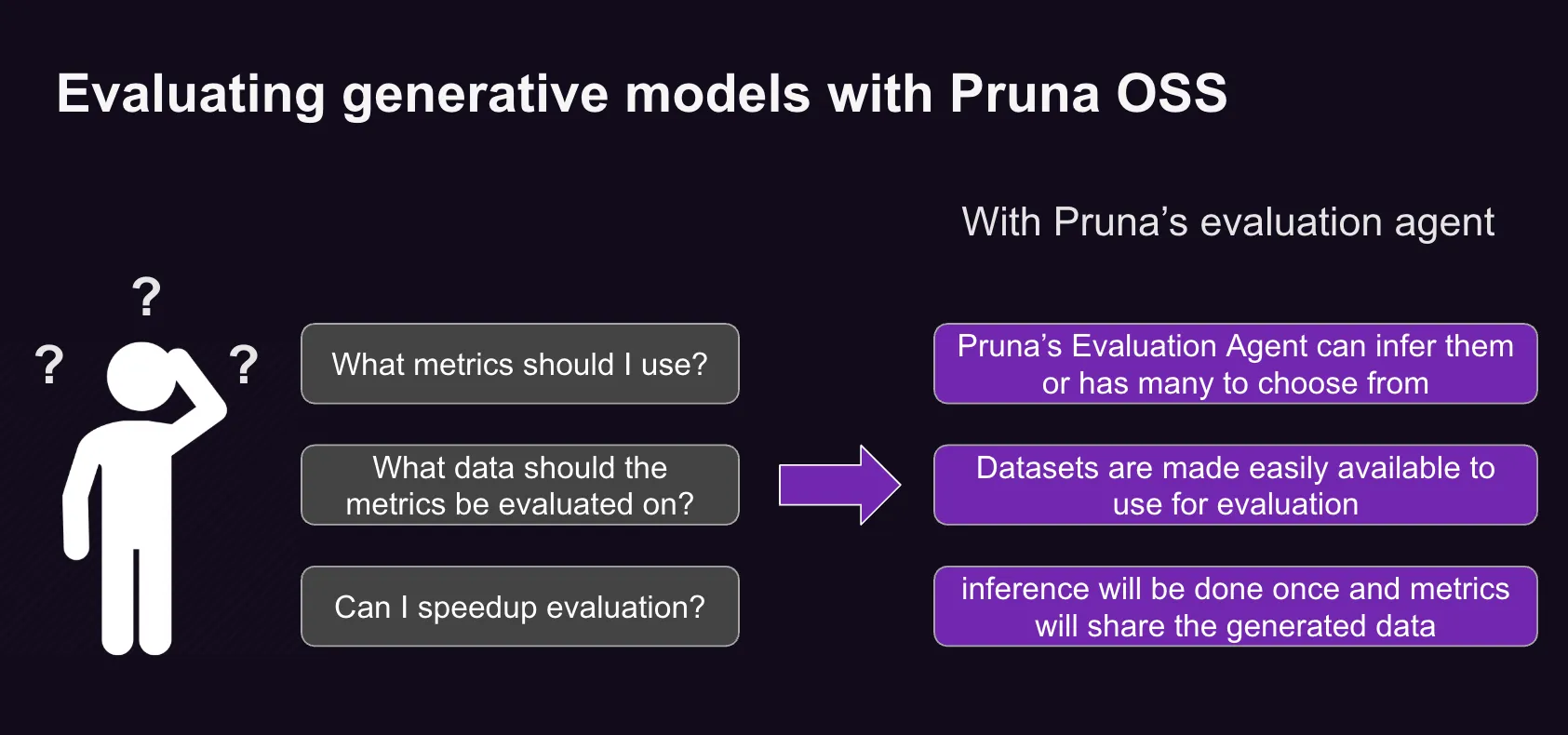

2. Evaluation Agent

Not sure which metric to use? The Evaluation Agent recommends and applies the right metrics based on your goals. Just describe your evaluation target, supply your dataset, indicate your hardware, and the Agent handles the rest. Including checking if your optimized model is compatible with your inference pipeline. Perfect for teams new to model evaluation or looking to simplify validation.

3. Evaluation Orchestration

The Evaluation Orchestration is about making the evaluation process flexible, repeatable, and team-friendly. Whether you need a quick check or a reusable, collaborative setup, Pruna’s orchestration tools help you manage and automate model evaluation.

You can run evaluations with Pruna in two straightforward ways:

The easiest way to get started = Direct Parameters Workflow: pass model path, dataset path, metric, and task directly to the Evaluation Agent.

For reusability, team collaboration, or recurring benchmarks = Task-Based Workflow: define

You also get to choose the evaluation style that fits your needs:

Single-Model Mode: Evaluate one model at a time to get a clear picture of its strengths—like speed, accuracy, or memory use.

Pairwise Mode: Compare two models side by side (for example, your optimized model vs. the original) and see exactly where you’ve made improvements.

4. Evaluation Dashboard (on the roadmap)

We're conducting user interviews to guide our research and shape the feature before development begins. If you're interested in participating, please fill out this contact form, and a member of our ML Research Engineering team will contact you.